Cloud technology and virtual networking have shifted many network decisions from networking teams to developers and cloud engineers. There are many new tools and patterns to protect web applications that developers and network administrators alike must learn and understand to safely deploy production-grade applications in Google Cloud.

Google Cloud Armor is one tool to protect web applications at Google’s network edge. It can deny traffic to specific geographies, enable common WAF policies, prevent cross-site scripting (XSS) and SQL injections, block DDoS attacks, and more.

This article covers Cloud Armor use cases and common architectures. We also show you how to configure a Cloud Armor security policy with a Terraform example, and how to think about firewall changes across your environment.

Summary of key Google Cloud Armor concepts

This article addresses the following Cloud Armor topics:

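| Topic | Summary |
| --- | --- |
| Use cases | Application protection and network protection for internet-facing applications hosted on Google Cloud. |
| Architecture | Cloud Armor can be placed in front of Application Load Balancers, Network Load Balancers, and VMs with public IP addresses. |
| Cloud Armor security policies | Google Cloud Armor at its core is a logical container for security policies. You can name a policy, choose its type and default action, add rules, and apply it to targets. |
| Change management | Setting up Cloud Armor on greenfield projects is the easiest and least risky. For real-world applications, you can use preview mode, pre-configured WAF rules, and sensitivity tuning. |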

Google Cloud Armor use cases

Cloud Armor is an excellent go-to managed firewall for internet-facing, public applications hosted on Google Cloud.

Application protection

Cloud Armor can defend against both network-layer (Layer 3 and Layer 4) and application-layer (Layer 7) attacks, helps mitigate the OWASP Top 10 risks, and integrates natively with the rest of Google Cloud’s security services.

It also offers a premium tier, Cloud Armor Managed Protection Plus. Managed Protection Plus includes all of the features of the standard tier but is billed as a fixed monthly subscription plus data processing fees. Enterprises usually buy it because they are especially prone to DDoS attacks and want access to Google’s on-call DDoS response team and DDoS bill protection in the event of an attack. Additionally, some corporate insurance policies are updating their terms to require contractual DDoS protection to mitigate financial risk.

Network protection

Cloud Armor is the first line of defense at the public internet’s edge. If your goal is to protect internal Google Cloud workloads, there are purpose-built tools to do so, including Identity-Aware Proxy (IAP) and VPC firewall rules. All three of these tools can be used together for a thorough defense-in-depth approach.

However, if you plan to use Cloud Armor to proxy requests via Google Cloud’s network to other public clouds or on-premises services, be sure to manage networking across your multi-cloud or on-premises environment thoughtfully. While you can add non-Google Cloud resources as backends of a Google Cloud load balancer (for example, through internet or hybrid network endpoint groups), doing so can introduce unintended risk through added management complexity and extra latency between services.

Google Cloud Armor architecture

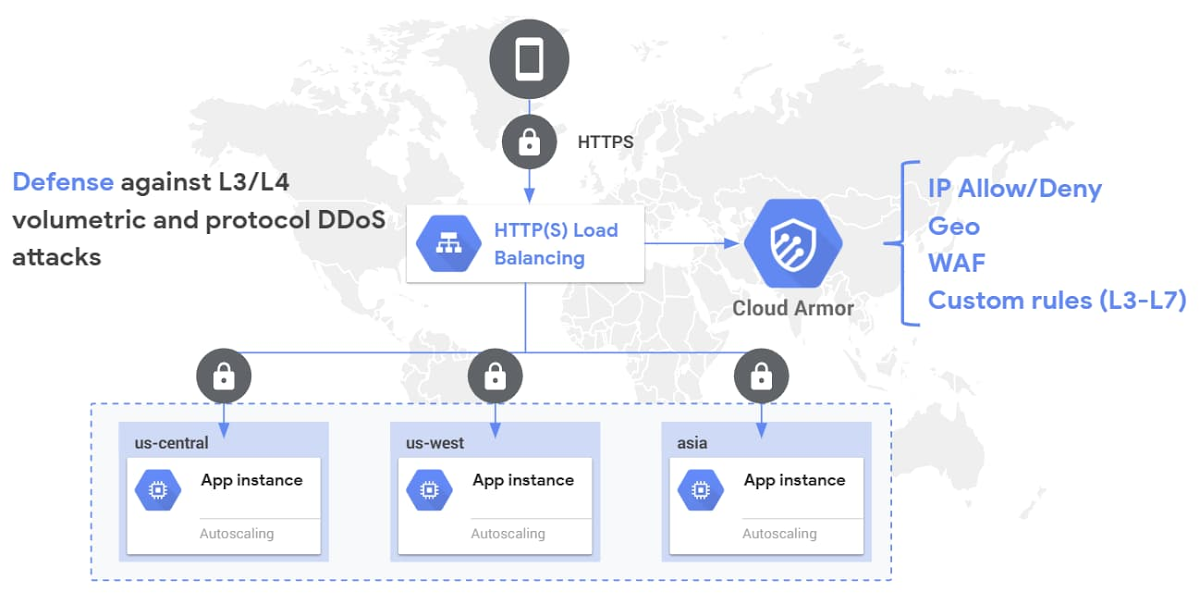

While Cloud Armor is a networking product, its implementation largely depends on the application infrastructure that you are aiming to defend. Cloud Armor can be placed in front of:

- Application Load Balancers

- Network Load Balancers

- VMs with Public IP Addresses

The image shows an architecture example of Cloud Armor’s built-in and custom security policies applied to an HTTPS Load Balancer in front of a globally distributed application. (Source)

You may notice that Google Cloud’s serverless offerings are not on this shortlist. If you want to use Cloud Armor in conjunction with Cloud Run, App Engine, or Cloud Functions, you must first leverage serverless network endpoint groups (serverless NEGs) and then place a load balancer in front of them. To simplify the management of these serverless NEGs, Google has created a Terraform module and included sample code on GitHub for a global Cloud Run deployment.
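As a rough sketch of this pattern, the following Terraform creates a serverless NEG for an existing Cloud Run service and adds it to a global backend service that carries a Cloud Armor policy. The region, the Cloud Run service name `example-service`, the resource names, and the `security_policy` variable are hypothetical placeholders, and a complete load balancer would still need a URL map, target proxy, and forwarding rule.

```hcl
# Hypothetical input: self link of an existing Cloud Armor security policy.
variable "security_policy" {
  type        = string
  description = "Self link of an existing Cloud Armor security policy"
}

# Serverless NEG that points at an existing Cloud Run service (name assumed).
resource "google_compute_region_network_endpoint_group" "cloud_run_neg" {
  name                  = "example-serverless-neg"
  region                = "us-central1"
  network_endpoint_type = "SERVERLESS"

  cloud_run {
    service = "example-service"
  }
}

# Global backend service for an external Application Load Balancer.
# Serverless NEG backends do not use health checks.
resource "google_compute_backend_service" "cloud_run_backend" {
  name                  = "example-serverless-backend"
  load_balancing_scheme = "EXTERNAL_MANAGED"

  backend {
    group = google_compute_region_network_endpoint_group.cloud_run_neg.id
  }

  # Cloud Armor evaluates this policy for requests routed to this backend.
  security_policy = var.security_policy
}
```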

If you plan to use Cloud Armor with Google Kubernetes Engine (GKE), it is easiest to do so by using the platform’s native ingress features with a BackendConfig object, like so:

```yaml
apiVersion: cloud.google.com/v1
kind: BackendConfig
metadata:
  namespace: cloud-armor-example
  name: my-backend-config
spec:
  securityPolicy:
    name: "example-cloud-armor-security-policy"
```
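The BackendConfig only takes effect once a Service in the same namespace references it through the `cloud.google.com/backend-config` annotation. If you manage Kubernetes objects with Terraform, a sketch using the Kubernetes provider's `kubernetes_service` resource might look like this. The Service name, selector label, and ports are hypothetical, and you would still need an Ingress that routes to this Service.

```hcl
# Assumes the Terraform Kubernetes provider is already configured for the GKE cluster.
resource "kubernetes_service" "example" {
  metadata {
    name      = "example-service" # hypothetical
    namespace = "cloud-armor-example"

    annotations = {
      # Apply my-backend-config (and its Cloud Armor policy) to all Service ports.
      "cloud.google.com/backend-config" = jsonencode({ default = "my-backend-config" })
      # Use container-native load balancing through NEGs.
      "cloud.google.com/neg" = jsonencode({ ingress = true })
    }
  }

  spec {
    selector = {
      app = "example-app" # hypothetical pod label
    }

    port {
      port        = 80
      target_port = 8080
    }
  }
}
```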

Cloud Armor security policies

The term “security policy” can have several meanings in the cloud landscape, depending on the context in which it is used. In general, security policies are clear definitions that regulate access to, or the behavior of, a system. Policies can be applied widely across entire IT estates, or granularly on individual resources. For example, a wide security policy may define a HIPAA-based encryption standard to ensure electronic health records are stored securely across all systems, while a granular policy may refer to a specific Organization Policy constraint in Google Cloud that enforces encryption settings on cloud storage services.

Because this article focuses on Cloud Armor, “security policies” here means Cloud Armor configuration, aligned with the NIST industry standard of firewall policies. Cloud Armor is a service that applies one or more security policies to a load balancer or VM. Designing security policies correctly is the most important step when deploying Cloud Armor in your environment.

Creating a security policy consists of four steps:

- Name the policy

- Choose the policy type and default action

- Add additional rules to the policy (optional)

- Apply the policy to a target (optional)

Above all, design policies so that they scale across your organization. Creating a consistent set of reusable policies for multiple backend services simplifies management as your cloud footprint grows.

Name the policy

Give the policy a short, descriptive name that captures the policy’s goal. Policy names must start with a lowercase letter, can contain only lowercase letters, numbers, and hyphens, cannot end with a hyphen, and can be at most 63 characters long. A detailed description is recommended to explain the policy’s purpose beyond the short name. Descriptions help when new team members join or when a name is too short to capture the full reasoning behind the policy. A good description also explains the policy’s purpose when reviewing infrastructure-as-code.

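Choose the policy type and default action

Next, choose the policy type and set the default action. For load balancers, Cloud Armor offers backend security policies and edge security policies, while network edge security policies cover Network Load Balancers and VMs with public IP addresses. The default action applies to any request that no other rule matches, so its value depends on how aggressive you want the policy to be: a default allow action is less restrictive, and a default deny action is more restrictive. Greenfield projects with tight API restrictions are likely to start with a default deny, while existing projects with production traffic should start with a default allow so that users are not interrupted.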
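For example, a greenfield API that should only be reachable from one known network could start from a default deny. In this sketch, the policy name and the 203.0.113.0/24 range (a documentation-only block) are hypothetical, and the default action is expressed as the rule with the lowest priority, 2147483647.

```hcl
resource "google_compute_security_policy" "api_default_deny" {
  name        = "api-default-deny"
  description = "Allow only the partner range; deny everything else"

  # Explicitly allowed range (hypothetical, documentation-only CIDR).
  rule {
    action   = "allow"
    priority = 1000
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["203.0.113.0/24"]
      }
    }
    description = "Allow partner range"
  }

  # Default rule: lowest priority, matches every source IP, and denies it.
  rule {
    action   = "deny(403)"
    priority = 2147483647
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["*"]
      }
    }
    description = "Default deny"
  }
}
```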

Add additional rules

The default rule described earlier has the lowest possible priority (2147483647), and Cloud Armor evaluates rules from the lowest priority number to the highest, so any additional rule is evaluated before it. While adding rules is optional, security policies are much less effective without them.

Rule actions go beyond allow and deny: you can also throttle requests, ban clients based on request rates, and redirect traffic. Each action has its own settings; for example, a redirect can respond with an HTTP 302 redirect to another URL or send the client to a Google reCAPTCHA challenge.
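A hedged sketch of two of these actions in Terraform follows: a 302 redirect for a specific range and a rate-based ban on a login path. The policy name, path, ranges, thresholds, and redirect URL are hypothetical. A `throttle` rule uses the same `rate_limit_options` block without `ban_duration_sec`, and setting the redirect type to `GOOGLE_RECAPTCHA` instead of `EXTERNAL_302` (with no `target`) sends clients to a reCAPTCHA challenge.

```hcl
resource "google_compute_security_policy" "rate_and_redirect" {
  name = "rate-and-redirect-example"

  # Redirect a suspicious range (documentation-only CIDR) to a hypothetical page.
  rule {
    action   = "redirect"
    priority = 900
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["198.51.100.0/24"]
      }
    }
    redirect_options {
      type   = "EXTERNAL_302"
      target = "https://www.example.com/blocked"
    }
    description = "302 redirect for 198.51.100.0/24"
  }

  # Ban a client IP for 10 minutes once it exceeds 20 requests per minute to /login.
  rule {
    action   = "rate_based_ban"
    priority = 1000
    match {
      expr {
        expression = "request.path.matches('/login')"
      }
    }
    rate_limit_options {
      conform_action   = "allow"
      exceed_action    = "deny(429)"
      enforce_on_key   = "IP"
      ban_duration_sec = 600
      rate_limit_threshold {
        count        = 20
        interval_sec = 60
      }
    }
    description = "Rate-based ban on /login"
  }

  # Default rule: allow everything else.
  rule {
    action   = "allow"
    priority = 2147483647
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["*"]
      }
    }
    description = "default rule"
  }
}
```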

Each rule can also have a description. Rule descriptions are a useful way to explain why certain IP address ranges exist in the rules, or the expected behavior of the rule.

Apply the policy to targets

You can select targets according to the architecture options described earlier:

- Application Load Balancers

- Network Load Balancers

- VMs with Public IP Addresses

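In practice, a policy that protects an Application Load Balancer is attached to the load balancer’s backend services rather than to the load balancer as a whole, so different backends behind the same load balancer can use different policies.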

Similar to additional rules being optional, applying the policy to a target is also optional. However, the security policy is only effective on your resources if it has a target configured.

Policy example

Google publishes a Terraform module for configuring Cloud Armor security policies, but the following example uses the underlying google_compute_security_policy resource directly. It creates a policy named “article-readers-policy” with a default rule of allow and an additional rule that denies requests from IP addresses in the 109.173.0.0/16 block with a 403 error.

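```hcl
resource "google_compute_security_policy" "policy" {
  name        = "article-readers-policy"
  description = "Allow all traffic except requests from 109.173.0.0/16"

  # Additional rule: evaluated before the default rule because 1000 < 2147483647.
  rule {
    action   = "deny(403)"
    priority = 1000
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["109.173.0.0/16"]
      }
    }
    description = "Deny access to IPs in 109.173.0.0/16"
  }

  # Default rule: lowest priority, matches every source IP.
  rule {
    action   = "allow"
    priority = 2147483647
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["*"]
      }
    }
    description = "default rule"
  }
}
```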

You may be wondering where the policy is applied to a target. In Terraform, this happens on the load balancer or VM resource rather than on the policy itself. In Google’s Terraform module for a global load balancer, each entry in the backend configuration has a security_policy field. It is null by default; set it to the name of the security policy, like so:

```hcl
backends = {
  default = {
    # Other required backend settings (groups, health check, and so on) omitted.
    security_policy = "article-readers-policy"
  }
}
```
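If you manage the backend service yourself instead of through the module, the attachment is the `security_policy` argument of the `google_compute_backend_service` resource. The following sketch assumes it lives in the same configuration as the `google_compute_security_policy.policy` resource shown earlier; the health check, the backend service names, and the `instance_group` variable are hypothetical.

```hcl
# Hypothetical input: self link of an existing managed instance group.
variable "instance_group" {
  type        = string
  description = "Self link of an existing instance group"
}

# Basic HTTP health check for the instance group (port assumed).
resource "google_compute_health_check" "http" {
  name = "article-readers-hc"

  http_health_check {
    port = 80
  }
}

# Backend service that acts as the policy's target.
resource "google_compute_backend_service" "web" {
  name                  = "article-readers-backend"
  load_balancing_scheme = "EXTERNAL_MANAGED"
  protocol              = "HTTP"
  health_checks         = [google_compute_health_check.http.id]

  backend {
    group = var.instance_group
  }

  # Attach article-readers-policy by reference instead of by name.
  security_policy = google_compute_security_policy.policy.self_link
}
```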

Change management when using Cloud Armor

Setting up Cloud Armor on greenfield projects is the easiest and least risky approach. However, most real-world applications are already in production and require a careful approach to changes. Cloud Armor offers several mechanisms to help you avoid excessively disrupting live site traffic, including the following.

Preview mode

Preview mode lets you see the effect of a new rule before it is enforced: Cloud Armor records what the rule would have done without actually taking the action. You can review the results in Cloud Logging and Cloud Monitoring. We highly recommend running every new rule in preview mode before enforcing it in your environment. With the gcloud CLI, you do this by adding the --preview flag when creating or updating a rule.
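In Terraform, the equivalent of the --preview flag is the `preview` argument on a rule. In this sketch, the policy name and the 192.0.2.0/24 range (a documentation-only block) are hypothetical; once the logged results look right, you would set `preview` to `false` (or remove it) to enforce the rule.

```hcl
resource "google_compute_security_policy" "preview_example" {
  name = "preview-example-policy"

  # Candidate rule in preview mode: matches are logged, but requests are not denied.
  rule {
    action   = "deny(403)"
    priority = 1000
    preview  = true
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["192.0.2.0/24"]
      }
    }
    description = "Candidate block for 192.0.2.0/24 (preview only)"
  }

  # Default rule: allow everything else.
  rule {
    action   = "allow"
    priority = 2147483647
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["*"]
      }
    }
    description = "default rule"
  }
}
```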

Pre-configured WAF rules

Pre-configured WAF rules let you leverage Google’s knowledge of common site attacks, such as the OWASP Top 10, and each rule set is published in stable and canary versions. You can treat the stable versions as Google’s production-ready rules. Using pre-configured rules lets you rely on Google’s view of malicious internet traffic instead of trying to create your own set of common rules.
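As a sketch, the following policy enforces the stable SQL injection and cross-site scripting rule sets from the ModSecurity Core Rule Set 3.3 family through custom rule expressions. The rule set names (`sqli-v33-stable`, `xss-v33-stable`) should be checked against Google’s current list of pre-configured rules; the policy name and priorities are hypothetical.

```hcl
resource "google_compute_security_policy" "owasp_waf" {
  name = "owasp-waf-policy"

  # Block requests that match the stable SQL injection signatures.
  rule {
    action   = "deny(403)"
    priority = 1000
    match {
      expr {
        expression = "evaluatePreconfiguredWaf('sqli-v33-stable', {'sensitivity': 1})"
      }
    }
    description = "Block SQL injection (CRS 3.3, stable)"
  }

  # Block requests that match the stable cross-site scripting signatures.
  rule {
    action   = "deny(403)"
    priority = 1001
    match {
      expr {
        expression = "evaluatePreconfiguredWaf('xss-v33-stable', {'sensitivity': 1})"
      }
    }
    description = "Block XSS (CRS 3.3, stable)"
  }

  # Default rule: allow everything else.
  rule {
    action   = "allow"
    priority = 2147483647
    match {
      versioned_expr = "SRC_IPS_V1"
      config {
        src_ip_ranges = ["*"]
      }
    }
    description = "default rule"
  }
}
```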

Sensitivity tuning

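You can tune the sensitivity of pre-configured WAF rules on a scale from 0 to 4. Levels 1 through 4 map to the ModSecurity Core Rule Set paranoia levels, while level 0 enables no signatures by default so that you can opt in to individual ones. Level 4 is the most sensitive: the higher the sensitivity, the more signatures are evaluated, so more traffic is blocked and the risk of false positives rises.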
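One way to raise sensitivity gradually is to keep enforcing a lower level while previewing the next level up. The following rule blocks are a config fragment meant to sit inside a `google_compute_security_policy` resource; the priorities are hypothetical. Because a rule in preview mode only logs its would-be action and evaluation then continues, the level-2 rule shows what a higher sensitivity would block without affecting live traffic.

```hcl
  # Candidate: sensitivity 2 in preview mode, logged but not enforced.
  rule {
    action   = "deny(403)"
    priority = 900
    preview  = true
    match {
      expr {
        expression = "evaluatePreconfiguredWaf('sqli-v33-stable', {'sensitivity': 2})"
      }
    }
    description = "SQLi, sensitivity 2 (preview)"
  }

  # Enforced today: sensitivity 1, the least sensitive level with the fewest false positives.
  rule {
    action   = "deny(403)"
    priority = 1000
    match {
      expr {
        expression = "evaluatePreconfiguredWaf('sqli-v33-stable', {'sensitivity': 1})"
      }
    }
    description = "SQLi, sensitivity 1 (enforced)"
  }
```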

Additional recommendations

We recommend using Terraform or another infrastructure-as-code tool to manage security policies. This allows for version control and quick rollbacks in case a security policy has unintended consequences. Reviewing the output of terraform plan and deploying new rules in preview mode first is a powerful way to understand your changes before they take effect.

There is also a prebuilt Network Security Policies dashboard in Cloud Monitoring where you can see the effects of your policies. Periodically reviewing these metrics, along with the related request logs, helps ensure that you are not blocking traffic that should be allowed.

Conclusion

When used correctly, Cloud Armor is a powerful tool for protecting the attack surface of your web applications while maintaining a positive experience for end users. Design Cloud Armor policies across your Google Cloud workloads so that they can be applied in a repeatable fashion at the network edge. Policy creation and maintenance should go through the same change management process as other network changes in your environment, to ensure predictable behavior and a smooth rollback in the event of a misconfigured policy. Managing policies with infrastructure-as-code tools is a best practice that reduces human error, enables code review, and keeps changes in a version control system.

Third-party tools like Paladin Cloud can check, remediate, and enforce compliance of your Cloud Armor security policies. The platform offers best-practice approaches to prioritizing the most important security risks across multi-cloud environments. Additionally, Paladin Cloud can set GCP-specific policies that are tightly scoped to Google Cloud’s Organization Policy Service.