Google Cloud offers several serverless compute options, notably Cloud Run and Cloud Functions. The term “serverless” has always been a misnomer, of course—there is a server somewhere. However, the term is important because it implies a key benefit, which is that the server is fully managed, so end users don’t have to worry about it.

Before we dive too deeply into the intricacies of Cloud Functions, it’s important to understand what they are. Public cloud platforms have continued to abstract server management by introducing “container-as-a-service” platforms like Cloud Run. However, these services still require you to build and deploy a container image. Cloud Functions introduce an additional layer of abstraction by allowing developers to simply upload raw code.

The second generation of Cloud Functions is powered by the same technology as Cloud Run, allowing developers to harness the traffic splitting, concurrency, and longer processing times native to Cloud Run. Many applications with simple logic, like those currently running on VMs or inside a container, can be segmented into Cloud Functions. However, because you lose control of the underlying infrastructure, it’s important to understand the security controls that can be applied to Cloud Functions. This article reviews identity-, network-, and platform-based methods to securely deploy Cloud Functions in your environment.

Summary of key Google Cloud Functions concepts

The table below summarizes the various security-focused Cloud Functions concepts described in this article.

| Concept | Description |
| --- | --- |
| Cloud Function identities | Instead of sharing the default Compute Engine service account across your environment, give each function boundary a unique service account to use. |
| Identity-based controls | Use IAM roles and permissions on both user accounts and service accounts to ensure that functions can only be invoked by authorized individuals or machines. |
| Ingress: network-based controls | Avoid the default setting, which allows all traffic; allow only internal traffic or internal traffic plus Cloud Load Balancing. |
| Egress: Serverless VPC Access | Egress traffic from Cloud Functions can be sent via the Serverless VPC Access connector to connect to private IPs such as internal VMs or databases. |
| Cloud Secret Manager | If your function needs to retrieve sensitive values such as an API key or database password, store it in and retrieve it from Secret Manager. |
| Organization Policy Service | Ensure that the recommendations and additional security features described above are in place by using organization policies. |
| Monitoring | Cloud Functions provides metrics and alerts similar to those of Cloud Run. |

Cloud Functions use cases

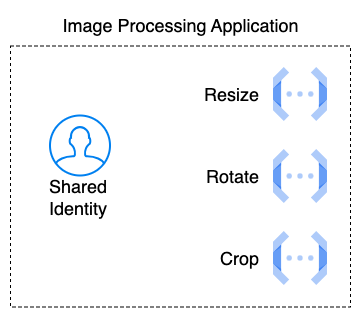

Common use cases of Cloud Functions include responding to user events or performing repeatable jobs, such as asynchronously processing files. For example, imagine that a social media site allows users to upload headshots for their profile pages. A Cloud Function may be triggered every time a new image is uploaded to resize the image to a web-friendly, standardized size and then crop it into a circle.

Running this code inside a virtual machine or using Kubernetes would be overkill for a single application or microservice, whereas using a functions-as-a-service platform would allow for segmentation and scalability. This can dramatically reduce operating costs because most serverless offerings bill only for actual usage, such as the number of invocations, rather than for idle capacity, and they scale automatically with demand: a win-win for cloud architectures.

Cloud Functions identities

Each Cloud Function can be configured to run as a specific identity by selecting a service account at deployment time. Cloud Functions leverage Google Cloud’s underlying metadata service to generate a token and send requests securely within the platform.

For development purposes, it’s important to note that users can impersonate these service accounts to test cross-service authentication in their local environments. However, Cloud Functions cannot run as user (human) accounts.

Every new Google Cloud project includes several Google-managed service accounts by default; the specific types and number of accounts will be determined by which APIs are activated on the project. If you deploy a Cloud Function without specifying a particular service account, it will use the default Compute Engine identity provided by the Compute Engine API.

It’s dangerous to assume trust when using this single default identity since malicious code could take full advantage of the wide-reaching permissions that it may have. Instead, it’s important to delineate Cloud Functions by their purposes and define a security boundary to determine which upstream and downstream resources each should be able to access. Once this boundary has been defined, specific user-managed service accounts should be provisioned for each Cloud Function’s purpose.
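As a minimal Terraform sketch of this pattern, the following provisions a dedicated, user-managed service account and attaches it to a second-generation function. The project ID, account ID, function name, and region are hypothetical placeholders, and the function's build configuration is left out to keep the focus on identity.

```hcl
# Hypothetical dedicated identity for a single function boundary.
resource "google_service_account" "image_resizer" {
  project      = "your-project-id"
  account_id   = "image-resizer-fn"
  display_name = "Identity for the image resizer function"
}

resource "google_cloudfunctions2_function" "image_resizer" {
  project  = "your-project-id"
  name     = "image-resizer"
  location = "us-central1"

  # build_config (runtime, entry point, source) omitted for brevity.

  service_config {
    # Run as the dedicated account instead of the default Compute Engine identity.
    service_account_email = google_service_account.image_resizer.email
  }
}
```

Because the account starts with no roles, every permission the function needs has to be granted explicitly, which keeps the security boundary visible in code review.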

For ease of resource management, multiple Cloud Functions operating within the same application or service can safely utilize a shared identity. However, additional identities should be provisioned for adequate segmentation when operating across applications in order to prevent broad lateral movement. Cloud Functions cannot operate across environments by design, and each Function, even within your own tenant, is securely segmented.

An application or API composed of three functions, each running a discrete step of a process and sharing an identity

Identity-based controls

Once the Cloud Functions and other associated Google Cloud resources have the appropriate identities provided to them, they should be assigned only the necessary IAM permissions that allow them to call each other.

To call a Cloud Function, the calling resource needs the Cloud Functions Invoker IAM role assigned to its service account. If you’re using Terraform to deploy IAM roles, be sure to review the differences between IAM policies, bindings, and members before assigning them to resources. An IAM binding is authoritative for its role: it replaces the role’s entire member list, so any member not listed in the binding loses the role. An IAM member resource is non-authoritative and simply adds one member to a role, leaving existing members untouched. For example, the following Terraform snippet would leave a single service account as the only holder of the Cloud Functions Invoker role in the project, potentially blocking other safe callers from reaching the service:

```hcl
# Authoritative for this role: any member not listed below loses the role.
resource "google_project_iam_binding" "project" {
  project = "your-project-id"
  role    = "roles/cloudfunctions.invoker"

  members = [
    "serviceAccount:another-function@your-project-id.iam.gserviceaccount.com",
  ]
}
```
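For comparison, a non-authoritative grant of the same role could look like the sketch below. It reuses the placeholder project and service account from the snippet above; the resource label `invoker` is arbitrary.

```hcl
# Non-authoritative: adds one member to the role and leaves other members in place.
resource "google_project_iam_member" "invoker" {
  project = "your-project-id"
  role    = "roles/cloudfunctions.invoker"
  member  = "serviceAccount:another-function@your-project-id.iam.gserviceaccount.com"
}
```

Avoid managing the same role with both a binding and a member resource, since the two will overwrite each other on every apply. Granting the role on an individual function rather than on the whole project narrows the scope further.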

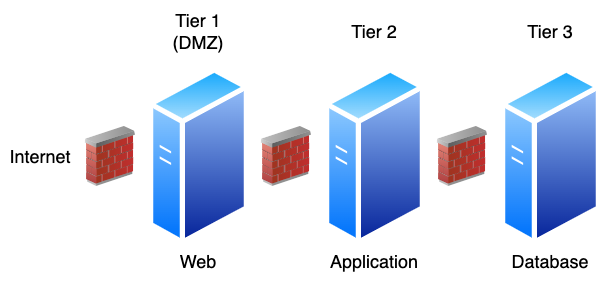

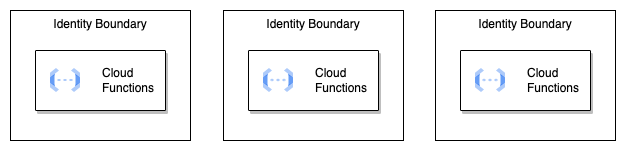

Similar to the N-tier networking architecture popularized by three-tier web applications, each Cloud Function should only be able to call the next Function or resource necessary to perform its duty using identity boundaries, as shown below.

Traditional three-tier architecture with network-based firewall security controls

The same three-tier architecture with identity as a boundary instead of a firewall

Ingress: network-based controls

By default, all network traffic can call a Cloud Function—this includes the internet! This can be risky for public services and is an especially dangerous configuration for internal services.

Instead, your Cloud Functions should be configured to allow internal traffic only or to allow internal traffic and Cloud Load Balancing traffic. There are a few differences:

- Internal Traffic Only allows traffic from other Google Cloud services, such as Cloud Scheduler or BigQuery, or any resources inside a Shared VPC network. You can also add VPC Service Controls as another layer of security, but test new perimeter configurations in dry run mode before enforcing them on newly deployed Cloud Functions. This is the recommended configuration if your Cloud Function serves internal traffic.

- Internal Traffic and Cloud Load Balancing includes everything above but also allows you to put a load balancer in front of your Cloud Functions. This offers a myriad of benefits, but the common use cases are custom domains with managed certificates and adding cross-region high availability. This is the recommended configuration if you need to serve external traffic.
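In Terraform, the ingress choice is a single attribute on the function. The sketch below assumes a second-generation function; the project ID, function name, and region are placeholders, and the build configuration is omitted.

```hcl
resource "google_cloudfunctions2_function" "internal_api" {
  project  = "your-project-id"
  name     = "internal-api"
  location = "us-central1"

  # build_config (runtime, entry point, source) omitted for brevity.

  service_config {
    # Other accepted values: "ALLOW_ALL" (the default) and "ALLOW_INTERNAL_AND_GCLB".
    ingress_settings = "ALLOW_INTERNAL_ONLY"
  }
}
```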

To use Cloud Functions behind a Cloud Load Balancer, the Cloud Functions must be added to a serverless network endpoint group (serverless NEG) and the NEGs must be set as the backend target of the Cloud Load Balancer. While this does require additional up-front configuration, using infrastructure-as-code tooling such as Terraform simplifies this, and Google has created a module to make it even easier.
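A hand-written version of the NEG and backend wiring might look like the following sketch. All names are hypothetical, and the rest of the load balancer (URL map, target proxy, forwarding rule, and certificate) is left out.

```hcl
# Serverless NEG that points at a function in the same region.
resource "google_compute_region_network_endpoint_group" "function_neg" {
  project               = "your-project-id"
  name                  = "function-neg"
  region                = "us-central1"
  network_endpoint_type = "SERVERLESS"

  cloud_function {
    function = "public-api" # hypothetical function name
  }
}

# Backend service that the load balancer's URL map would route to.
resource "google_compute_backend_service" "function_backend" {
  project               = "your-project-id"
  name                  = "function-backend"
  load_balancing_scheme = "EXTERNAL_MANAGED"

  backend {
    group = google_compute_region_network_endpoint_group.function_neg.id
  }
}
```

For a second-generation function, the NEG may need to reference the underlying Cloud Run service through a `cloud_run` block instead; check the provider documentation for your function generation.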

You can ensure that your Cloud Functions network ingress settings match your organization’s security policies with tools such as Paladin Cloud, giving you the ability to quickly identify and remediate any deployments that allow unwanted traffic.

Egress: Serverless VPC Access

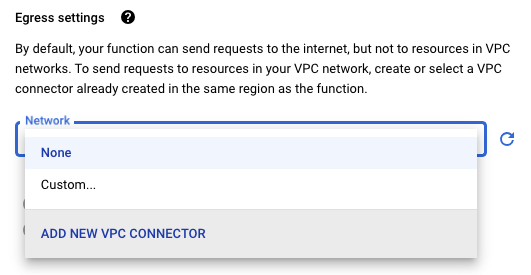

By default, outbound calls from Cloud Functions leave through Google’s data center infrastructure over the public internet. If your Cloud Function needs to make network calls to private resources that are only reachable at internal IP addresses, such as a Cloud SQL database or VM, you must first explicitly route the traffic into a VPC.

Google Cloud created the Serverless VPC Access Connector service to accomplish this connectivity pattern, but there is a major caveat to be aware of: the connector scales in one direction only. It adds instances under load but never removes them. This shouldn’t be an issue if your Cloud Function serves internal, predictable traffic. However, if you’re leveraging Cloud Functions to handle external traffic with high elasticity, the connector will scale up during a spike and stay there, leaving you responsible for the bill created by the underlying VMs. If routing through a VPC is a mandatory, compliance-driven requirement, Paladin Cloud offers a Google Cloud Function policy to ensure that all functions are deployed using VPC connectors.

To connect to a private Cloud SQL database from a Cloud Function:

- Create a Serverless VPC Access Connector in the same project and region as the Cloud Function.

- Configure your Cloud Function to use the connector in the Function’s Connections settings.

Egress settings dropdown in the Cloud Functions configuration page

- Connect using your Cloud SQL instance’s private IP on the database port (for example, 5432 for PostgreSQL or 3306 for MySQL).

This pattern will allow a direct TCP connection without using the Cloud SQL Auth Proxy, a slightly different connectivity pattern than Cloud Run.
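The first two steps above can be sketched in Terraform as follows. The connector name, network, and CIDR range are assumptions for illustration; the `/28` range must not overlap with existing subnets in your VPC.

```hcl
resource "google_vpc_access_connector" "connector" {
  project       = "your-project-id"
  name          = "fn-connector"
  region        = "us-central1" # must match the function's region
  network       = "default"
  ip_cidr_range = "10.8.0.0/28"

  # The connector does not scale back down, so max_instances caps the cost.
  min_instances = 2
  max_instances = 3
}

resource "google_cloudfunctions2_function" "db_client" {
  project  = "your-project-id"
  name     = "db-client"
  location = "us-central1"

  # build_config (runtime, entry point, source) omitted for brevity.

  service_config {
    vpc_connector = google_vpc_access_connector.connector.id
    # Send only private-range traffic through the connector.
    vpc_connector_egress_settings = "PRIVATE_RANGES_ONLY"
  }
}
```

Keeping `max_instances` low limits how far the one-way scaling can go, at the cost of a lower throughput ceiling.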

It is also possible to assign a static public IP address to a Cloud Function, but it does require adding a Cloud NAT to your architecture, adding cost and complexity with limited use cases.
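As a rough sketch of what that extra architecture involves, the Terraform below reserves a static address and attaches it to a Cloud NAT gateway on the VPC that the connector uses. Resource names and the `default` network are placeholders.

```hcl
# Static external IP that outbound function traffic will appear to come from.
resource "google_compute_address" "egress_ip" {
  project = "your-project-id"
  name    = "fn-egress-ip"
  region  = "us-central1"
}

resource "google_compute_router" "router" {
  project = "your-project-id"
  name    = "fn-router"
  region  = "us-central1"
  network = "default"
}

resource "google_compute_router_nat" "nat" {
  project                            = "your-project-id"
  name                               = "fn-nat"
  router                             = google_compute_router.router.name
  region                             = "us-central1"
  nat_ip_allocate_option             = "MANUAL_ONLY"
  nat_ips                            = [google_compute_address.egress_ip.self_link]
  source_subnetwork_ip_ranges_to_nat = "ALL_SUBNETWORKS_ALL_IP_RANGES"
}
```

For this to take effect, the function must send all outbound traffic through its VPC connector (the `ALL_TRAFFIC` egress setting) rather than only private ranges.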

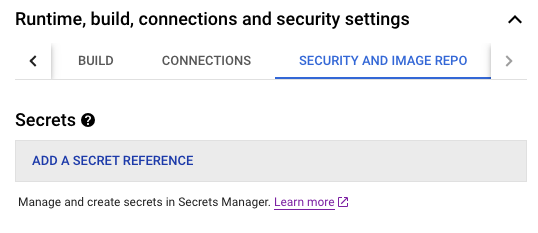

Cloud Secret Manager

If your Function requires access to any sensitive values, such as API keys or database credentials, they should be retrieved from Google Cloud Secret Manager instead of being hardcoded in source code or stored as plain-text environment variables.

Secrets referenced from Secret Manager can either be mounted as a file volume or set up as an environment variable exposed to the Cloud Function runtime. To understand how this is deployed from within the Cloud Console, click on the Security and Image Repo settings:

Security settings in the Cloud Functions configuration page to add a Secret

The Function’s associated service account will need the Secret Accessor IAM role to retrieve the secret successfully. For more information about Google Cloud Secret Manager, see this article.
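The same setup can be sketched in Terraform. The secret name `db-password`, the `DB_PASSWORD` variable, and the service account are hypothetical, and the secret itself is assumed to exist already.

```hcl
# Allow the function's identity to read one specific secret.
resource "google_secret_manager_secret_iam_member" "fn_accessor" {
  project   = "your-project-id"
  secret_id = "db-password"
  role      = "roles/secretmanager.secretAccessor"
  member    = "serviceAccount:db-client-fn@your-project-id.iam.gserviceaccount.com"
}

resource "google_cloudfunctions2_function" "db_client" {
  project  = "your-project-id"
  name     = "db-client"
  location = "us-central1"

  # build_config (runtime, entry point, source) omitted for brevity.

  service_config {
    service_account_email = "db-client-fn@your-project-id.iam.gserviceaccount.com"

    # Expose the secret to the runtime as the DB_PASSWORD environment variable.
    secret_environment_variables {
      key        = "DB_PASSWORD"
      project_id = "your-project-id"
      secret     = "db-password"
      version    = "latest"
    }
  }
}
```

Granting the accessor role on the individual secret, rather than at the project level, keeps the function from reading unrelated secrets.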

For additional security, you can protect Cloud Functions data at rest with a customer-managed encryption key (CMEK) saved in Cloud KMS.

Organization Policy Service

Organization policies allow administrators to create and enforce platform rules for end users, and many of the previous key points in this article can be configured using the Organization Policy Service. As of this writing, four policies can control the behavior of Cloud Functions:

- Allowed Cloud Functions Generations: This should be set to second-generation functions for maximum security.

- Allowed Ingress Settings: This should be restricted to internal or internal and cloud load balancing traffic.

- Allowed VPC Connector Egress Settings: This should be enforced if your company requires all traffic to go through a VPC network and should be used in tandem with the next policy.

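- Require VPC Connector: This restriction requires a VPC connector to be selected at deployment time. Organization policies are generally evaluated when a resource is created or updated, so audit existing functions separately to confirm that they are compliant.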
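Two of these policies could be codified with Terraform roughly as shown below. The organization ID is a placeholder, and the constraint names reflect the Cloud Functions constraints as understood at the time of writing, so verify them against the current constraint list before applying.

```hcl
# Only permit the two recommended ingress settings.
resource "google_organization_policy" "allowed_ingress" {
  org_id     = "123456789012" # placeholder organization ID
  constraint = "cloudfunctions.allowedIngressSettings"

  list_policy {
    allow {
      values = ["ALLOW_INTERNAL_ONLY", "ALLOW_INTERNAL_AND_GCLB"]
    }
  }
}

# Reject deployments that do not specify a VPC connector.
resource "google_organization_policy" "require_vpc_connector" {
  org_id     = "123456789012" # placeholder organization ID
  constraint = "cloudfunctions.requireVPCConnector"

  boolean_policy {
    enforced = true
  }
}
```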

It’s important to verify the IAM roles in your environment so that organization policies cannot be edited by non-administrator users. Overly broad policy-editing permissions are a common mistake in new cloud setups, and they let end users simply disable any organization policy enforcement that they don’t agree with.

Monitoring

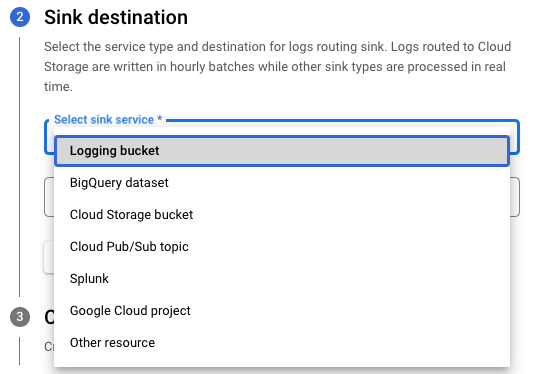

Because second-generation Cloud Functions run on the same infrastructure as Cloud Run, the same monitoring data is available in the Logs Explorer. This includes standard logging, error reporting, and platform metrics that can provide extensive visibility for security-focused teams.

If your Cloud Function performs a unique task with a high volume of critical events and you’d like to segment its logging, you can create a separate logging destination or update the logging sinks to redirect the Cloud Function’s data, as shown below.

Sink service destinations in Cloud Logging
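A dedicated sink for one function could be declared along these lines. The sink name, Pub/Sub topic, and function name are hypothetical, and the filter assumes a second-generation function whose logs are recorded against its underlying Cloud Run service.

```hcl
resource "google_logging_project_sink" "function_logs" {
  project     = "your-project-id"
  name        = "image-resizer-logs"
  destination = "pubsub.googleapis.com/projects/your-project-id/topics/security-events"

  # Assumption: second-generation functions log as Cloud Run revisions.
  # First-generation functions use resource.type="cloud_function" instead.
  filter = "resource.type=\"cloud_run_revision\" AND resource.labels.service_name=\"image-resizer\""

  # Create a dedicated service account for this sink's writes.
  unique_writer_identity = true
}
```

The sink's writer identity still needs permission to publish to the destination before any entries arrive.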

Changing these logging settings can be particularly useful if you need to send log data to a third-party security tool for alerting or responding to security events.

Summary of key concepts

Whenever you embrace a functions-as-a-service (FaaS) tool, you must be aware of vendor lock-in. It can be more difficult to move code off a function-based platform than off a container- or VM-based platform. Even so, there are several ways to benefit from Cloud Functions while keeping them as secure as other cloud-based infrastructure.

Adopting a defense-in-depth approach that focuses on each layer of the technology stack, along with codified policies and segmented responsibilities, will allow your organization to deploy Cloud Functions safely. Cloud Functions can primarily be secured at the identity and network layers, offering developers all the benefits of serverless computing without creating additional security headaches.

Paladin Cloud offers several Google Cloud policies to enforce specific deployment patterns for all Cloud Functions in your environment. These policies allow developers and security teams alike to quickly identify and remediate non-compliant resources, allowing organizations to confidently leverage the benefits of Google’s serverless computing tools while ensuring their runtime environments are secure.