As a company grows, the amount of data it generates and stores grows along with it. The data grows not only in volume through the company’s day-to-day operations but also in the number of data classes in use, due to organic expansion such as new projects or features. Accordingly, a multi-faceted approach must be followed to ensure the complete protection of a company’s data.

Data security breaches can ruin a company’s reputation and threaten its viability or future commercial or financial deals. According to the latest available data, approximately 60% of enterprise data is stored in the cloud. This article provides insights into best practices that enterprises can use to improve their data security.

Summary of enterprise data security best practices

| Best practice | Description |
|---|---|
| Use data encryption | Data encryption, at rest and in transit, must be done end-to-end and with the correct encryption algorithms. |
| Focus on network security | Network access and architecture must be instituted to protect sensitive data and processes. |
| Implement threat detection and monitoring | Threat detection and monitoring must pick threats up as quickly as possible, with enough information and context to respond appropriately. |
| Improve company culture and user education | The weakest component of any system is its users. Educating users and creating a security-conscious culture seeks to address that issue. |
| Conduct security audits and regular security scans | Proactively detect threat vectors in your company introduced by system and procedural changes by regularly poking holes in them and fixing what you find. |
| Institute access control procedures | Access control is only as effective as the processes managing it. Ensure that access is granted and revoked properly. |
| Classify data | Correct data classification allows companies and their users to effectively focus security efforts. |
| Ensure regulatory compliance | Complying with external requirements ensures that data stays safe and that companies are free to do business without the risk of fines or regulatory limitations. |

Enterprise data security best practices

Use data encryption

Data encryption involves three major facets that must be addressed: encryption of data at rest, in transit, and in use.

How best to encrypt data at rest, such as in a database or file store, depends heavily on the storage medium and the access required to the data. Encryption for data stored in a database that’s constantly accessed should be transparent to the database client and systems. Microsoft’s SQL Server Transparent Data Encryption (TDE) is a good example of this type of technology. Encryption of files stored in a long-term archive doesn’t need to be so transparent: AWS KMS keys or even PGP encryption can be used for this purpose. AWS S3 file storage is encrypted transparently and (by default) using Amazon S3 managed keys, while Microsoft Azure Blob storage uses 256-bit AES encryption.

Encrypting data in transit is heavily dependent on the protocol being used. For normal web traffic, Transport Layer Security (TLS) is used with HTTP (the combination is called HTTPS) and is the industry standard in encryption: readily available and fairly simple to implement. Streaming security camera feeds, which sometimes use the Real-Time Streaming Protocol (RTSP), might pose a bigger problem since many devices only support unencrypted RTSP. A solution to consider for protocols or devices that don’t support encryption is using an SSH or TLS tunnel through which the traffic can be proxied.
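As a minimal sketch of what enforcing TLS on the client side can look like, the snippet below builds a TLS context with Python’s standard `ssl` module. The function name `make_client_context` and the choice of TLS 1.2 as the minimum version are assumptions made for illustration; your own policy may require a different floor.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server it talks to."""
    # create_default_context() turns on certificate verification and
    # hostname checking and loads the system's trusted CA certificates.
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_context()
```

The resulting context can be passed to standard library clients, for example `urllib.request.urlopen(url, context=context)`, so that a connection fails rather than silently falling back to an unverified or outdated protocol.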

Although encryption for data in use is mainly a hardware concern, it’s become increasingly important to pay attention to it from an enterprise perspective. Data in use usually refers to data stored in a system’s memory, which includes certificates and encryption keys that are used in the encryption of other data elements. The compromise of these elements can lead to the compromise of entire systems, so technologies like full memory encryption and CPU-based key storage should be explored to mitigate hardware attacks. Paladin Cloud security policies can ensure that your AWS, Azure, or GCP cloud accounts are following encryption best practices. For example, you can deny access to S3 buckets via HTTP and ensure that your EBS volumes are encrypted at rest.
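To make the “deny access to S3 buckets via HTTP” example concrete, here is a sketch that generates an S3 bucket policy using the `aws:SecureTransport` condition key, which is the commonly documented way to reject non-TLS requests. This illustrates the underlying AWS control, not how Paladin Cloud implements its checks; the function name and the bucket name `example-bucket` are hypothetical.

```python
import json


def deny_insecure_transport_policy(bucket_name: str) -> dict:
    """Return a bucket policy that denies every request not made over TLS."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and the objects inside it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" for plain-HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# "example-bucket" is a placeholder name used only for this sketch.
policy_json = json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2)
```

The generated JSON would then be attached to the bucket through whichever tooling you already use for infrastructure changes.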

Focus on network security

When designing systems and enterprise networks, you want to minimize the attack surface as much as possible. Data that cannot be reached cannot be compromised. A company’s network must be as closed as possible, exposing only the minimum services that need to be accessed externally. For example, if a system is only used on the company VLAN, why expose it externally?

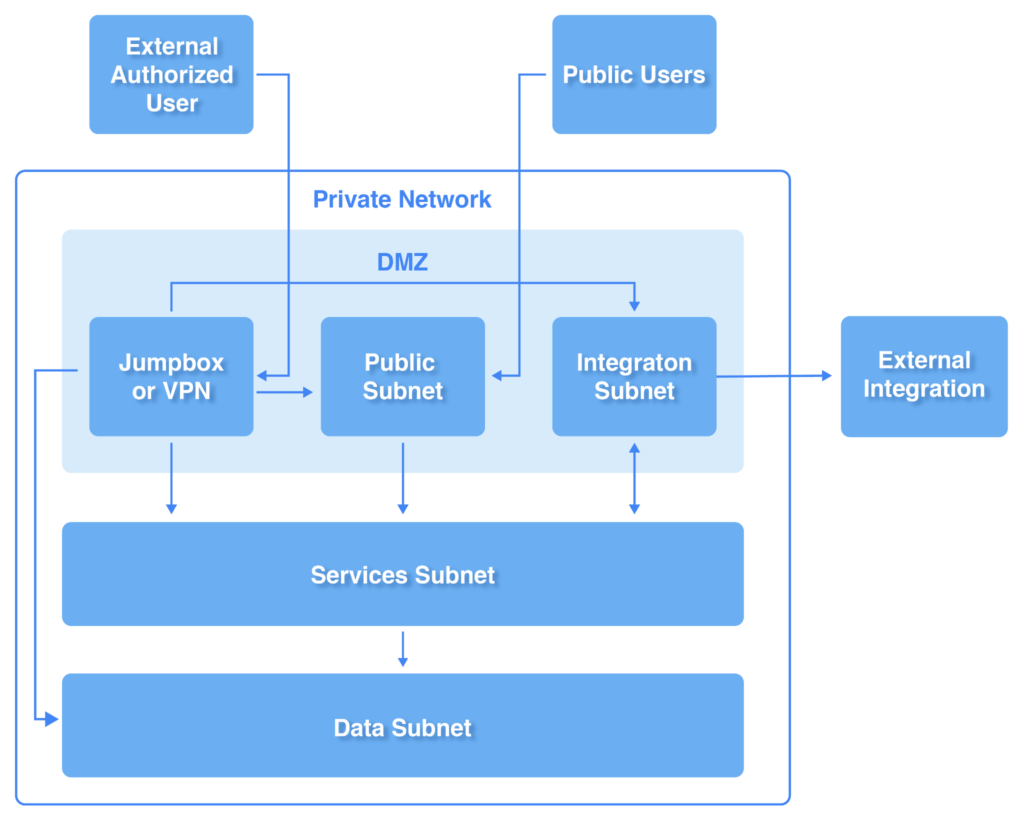

The diagram below depicts a typical subnet architecture used by software systems and illustrates several concepts that can be applied when architecting a secure enterprise network.

A simple network architecture showing the separation between various concerns

Tips to keep in mind when creating a network architecture:

- Use subnetting to create separate network segments.

- Keep all public services or potential attack vectors, like outside integrations, on separate subnets (sometimes called DMZs).

- Place services, data storage, and integrations in separate subnets.

- Tightly control communication between subnets through firewalls and minimal route setups.

- Provide limited and controlled access to the private network segments through VPNs or jumpboxes.

Isolating various concerns into separate subnets significantly increases the effort for an attacker to access sensitive areas of your system or network. Should a public or integration service be compromised, the attacker’s access to the data layer would be restricted since the DMZ can only access the data subnet through the services subnet.
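The segmentation rules described above can be sketched as a default-deny allow-list between subnets. The address ranges, segment names, and the `ALLOWED_FLOWS` set below are hypothetical values chosen for illustration; in practice these rules would live in your firewall or cloud security group configuration, and this sketch also assumes that traffic within a single segment is permitted.

```python
import ipaddress

# Hypothetical address plan: one subnet per concern.
SEGMENTS = {
    "dmz": ipaddress.ip_network("10.0.1.0/24"),
    "services": ipaddress.ip_network("10.0.2.0/24"),
    "data": ipaddress.ip_network("10.0.3.0/24"),
    "vpn": ipaddress.ip_network("10.0.4.0/24"),
}

# Allowed (source, destination) pairs; anything not listed is denied.
ALLOWED_FLOWS = {
    ("dmz", "services"),
    ("services", "data"),
    ("vpn", "services"),
    ("vpn", "data"),
}


def segment_of(address: str):
    """Return the name of the segment containing `address`, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_flow_allowed(source: str, destination: str) -> bool:
    """Default-deny check: only listed segment pairs may communicate."""
    src, dst = segment_of(source), segment_of(destination)
    if src is None or dst is None:
        return False  # Unknown addresses are never trusted.
    return src == dst or (src, dst) in ALLOWED_FLOWS
```

With this rule set, a compromised host in the DMZ cannot open a connection straight to the data subnet; it would first have to get through the services subnet.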

Authorized access is allowed through the use of a VPN, with sufficient security and authentication implemented. Since the chosen access method will have access to various parts of the network, extra care must be taken to lock it down as much as possible. This is especially critical in hybrid and cloud environments, since the cloud environment is essentially exposed to the internet, and a misconfiguration in your DMZ, access method or even just a single EC2 instance can create gaps in your security.

Implement threat detection and monitoring

Threat detection is an ongoing and ever-changing concern since both changes in an organization and the discovery of new attacks and vulnerabilities can expose new threats. Organizations must invest time and money into the identification, prioritization, and remediation of the most important security risks because it only takes one slip or vulnerability to bring a company to its knees.

Threat detection and monitoring consists of the following components:

- Security information and event management (SIEM): Collect and analyze log and event data for relevant security events.

- Intrusion detection systems (IDSes) and intrusion prevention systems (IPSes): Detect potential malicious behavior on networks and systems.

- Endpoint detection and response: Detect potential malicious behavior on specific endpoints such as laptops and servers.

- User and entity behavior analytics: Identify anomalies in user behavior through the analysis of user patterns.

- Data loss prevention (DLP): Specifically detect and prevent the loss, misuse, or unauthorized access of sensitive data in an organization.
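To give a feel for the kind of rule a SIEM evaluates over event data, the sketch below flags source IP addresses with repeated failed logins inside a sliding time window. The event shape `(timestamp, source_ip, success)`, the function name, and the default threshold of five failures in five minutes are all assumptions made for this example; real products use far richer rules and context.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def detect_bruteforce(events, threshold=5, window=timedelta(minutes=5)):
    """Return source IPs with `threshold` or more failed logins within `window`.

    `events` is an iterable of (timestamp, source_ip, success) tuples and
    is assumed to be sorted by timestamp.
    """
    recent_failures = defaultdict(deque)
    flagged = set()
    for timestamp, source_ip, success in events:
        if success:
            continue
        failures = recent_failures[source_ip]
        failures.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while timestamp - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(source_ip)
    return flagged
```

A rule like this only becomes useful once it feeds an alert that carries enough context (which account, which system, what happened next) for someone to respond appropriately.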

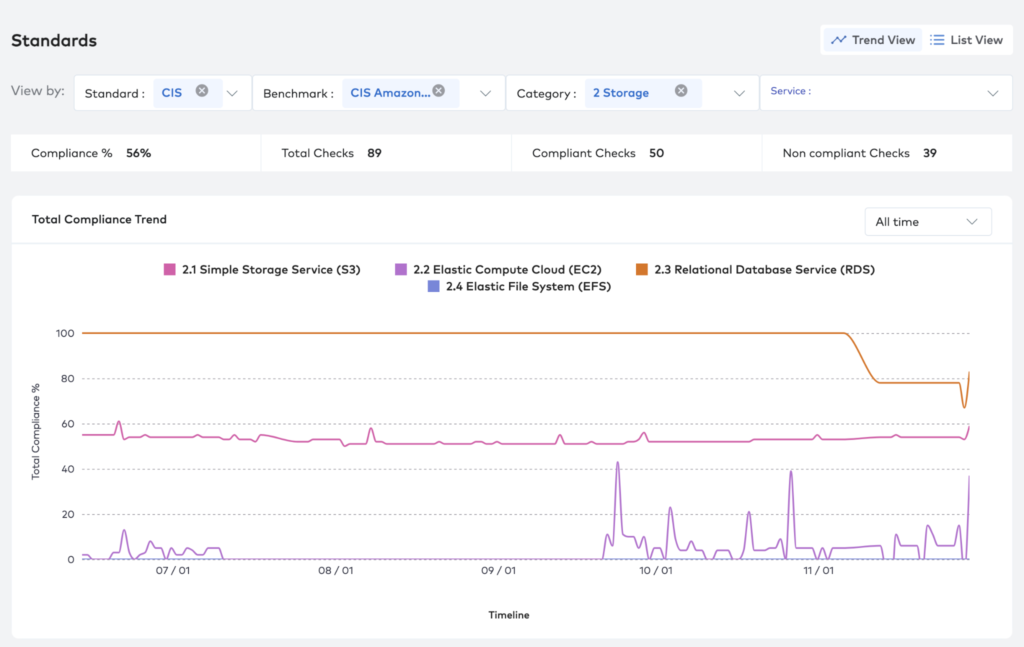

The image below shows an example from Paladin Cloud of compliance monitoring CIS data storage benchmarks against different AWS storage services. The total compliance percentage against time is shown for AWS S3, EC2, RDS, and EFS.

An example of monitoring CIS data storage benchmarks against different AWS storage services from Paladin Cloud.

Improve company culture and user education

The weakest component in the protection of any system is usually the human element: Our level of attention to detail and discipline in enforcing requirements fluctuates with how hungry, tired, or excited we are. Under the right conditions, we can be sweet-talked into bending the rules. To counter this, security awareness needs to be ingrained into the company culture and users’ daily routines.

Several simple steps can greatly reduce the chance of human attack vectors:

- Promote the use of company-approved password managers.

- Enforce good password policies, such as minimum complexity and regular changes.

- Provide multifactor authentication methods for all systems and enforce their use.

- Encourage users to log out of and lock their PCs when not at their desks.

Over time, user security awareness will fade as the pressures of work and personal life mount. Regular training sessions and simulations should be scheduled to keep security top of mind for your employees and to communicate any new developments or attack vectors.

Conduct security audits and regular security scans

Any change, be it in personnel, procedures, or systems, can introduce new attack vectors. Regular scans of the more exposed attack surfaces in an enterprise can help proactively identify security risks. Because the scans run at regular, predefined intervals, they also pick up risks introduced by organizational changes.

The two most common attack surfaces to scan are user interfaces and network infrastructure. User interfaces are, by definition, easy to access, making them an easy target. Common issues like insecure communication and information leakage can be picked up by these scans and addressed through remediation processes. Network infrastructure needs to be scanned both internally and externally. External scans will find open ports or pinholes that should not be open, and internal scans can pick up insecure services that need to be patched.
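As a rough illustration of what an external scan checks, the sketch below tries a TCP connection to a list of ports and reports any open port that is not on an expected list. It is not a substitute for a dedicated scanner, the function names are hypothetical, and it should only ever be pointed at hosts you are authorized to test.

```python
import socket


def find_open_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` on `host` that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex() returns 0 on success instead of raising an error.
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports


def unexpected_open_ports(host, ports, expected, timeout=0.5):
    """Return open ports that are not in the `expected` allow-list."""
    return [p for p in find_open_ports(host, ports, timeout) if p not in expected]
```

Running a check like this on a schedule and comparing the result with the previous run is one simple way to notice a port that was opened by a recent change.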

Security audits can no longer be periodic scans limited to reviewing assessment results and logs. A variety of security tools provide findings and policy violations related to vulnerabilities, misconfigurations and your general data security posture, all of which need to be integrated and considered. Generative AI-powered tools, such as Paladin Cloud, can aggregate and correlate these findings into a holistic view to help your organization prioritize and remediate the most important data security posture management risks. As an example, tools like this can help connect a critical vulnerability on a data store in the cloud with the sensitive data classification of the data contained in that store. The correlation of these two findings drives enhanced prioritization of security risks.
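The idea of correlating a vulnerability finding with the classification of the affected data store can be sketched in a few lines. The scoring tables, the `Finding` type, and the multiplication used to rank risks are assumptions invented for this example; they are not Paladin Cloud’s algorithm or any product’s actual scoring model.

```python
from dataclasses import dataclass

# Hypothetical scales; a real tool would use its own scoring model.
SEVERITY_SCORE = {"low": 1, "medium": 2, "high": 3, "critical": 4}
SENSITIVITY_SCORE = {"public": 1, "internal": 2, "confidential": 3, "restricted": 4}


@dataclass(frozen=True)
class Finding:
    resource_id: str
    title: str
    severity: str


def prioritize(findings, classifications):
    """Sort findings so severe issues on sensitive data stores come first.

    `classifications` maps a resource ID to its data sensitivity label.
    Resources without a label are treated as "internal" in this sketch.
    """
    def risk(finding):
        sensitivity = classifications.get(finding.resource_id, "internal")
        return SEVERITY_SCORE[finding.severity] * SENSITIVITY_SCORE[sensitivity]

    return sorted(findings, key=risk, reverse=True)
```

Under this toy model, a high-severity finding on a store holding restricted data outranks a critical finding on a store holding only public data, which is the kind of reordering the correlation is meant to produce.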

Institute access control procedures

Change, from a technical perspective, is usually well managed through change control and code repositories. Organizational change, however, doesn’t necessarily translate that well to the technical realm. It’s important to have stringent user onboarding and offboarding procedures that ensure that users who have left the company or changed roles don’t have residual access to systems or data.

Using a centralized access control system like Active Directory greatly simplifies access control management. Regular audits of user lists and access and login behavior should form part of a company’s regular and scheduled security activities. The proliferation of single sign-on (SSO) support in various systems has made the use of centralized access control a no-brainer for any organization using multiple systems.
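A regular access audit can start as something as simple as comparing the accounts in your directory with the current employee list. The sketch below assumes you can export accounts as a mapping of username to last login date; the function name, the input shapes, and the 90-day idle threshold are hypothetical choices for illustration.

```python
from datetime import date, timedelta


def audit_accounts(accounts, active_employees, today, max_idle_days=90):
    """Return (orphaned, stale) account lists.

    `accounts` maps username -> last login date (None if never logged in).
    `active_employees` is the set of usernames that should have access.
    """
    # Accounts with no matching active employee: offboarding was missed.
    orphaned = sorted(user for user in accounts if user not in active_employees)

    # Valid accounts that have not been used recently: candidates for review.
    cutoff = today - timedelta(days=max_idle_days)
    stale = sorted(
        user
        for user, last_login in accounts.items()
        if user in active_employees and (last_login is None or last_login < cutoff)
    )
    return orphaned, stale
```

Orphaned accounts point at gaps in the offboarding procedure, while stale accounts are worth reviewing with the owner’s manager before access is revoked.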

Classify data

Data can be organized into various classes requiring different security, archiving, data recovery and sanitization measures. Certain classes would also need to comply with government-mandated regulations. It’s good practice to define the various data classes for your company and to then associate the necessary actions and checks for each class.

Understanding the various data classifications in your organization makes it easier to implement data security posture management (DSPM) and also makes DSPM integrations that much more effective. In a cloud context, tools such as AWS Glue can be used for both data cataloging and labeling or tagging.

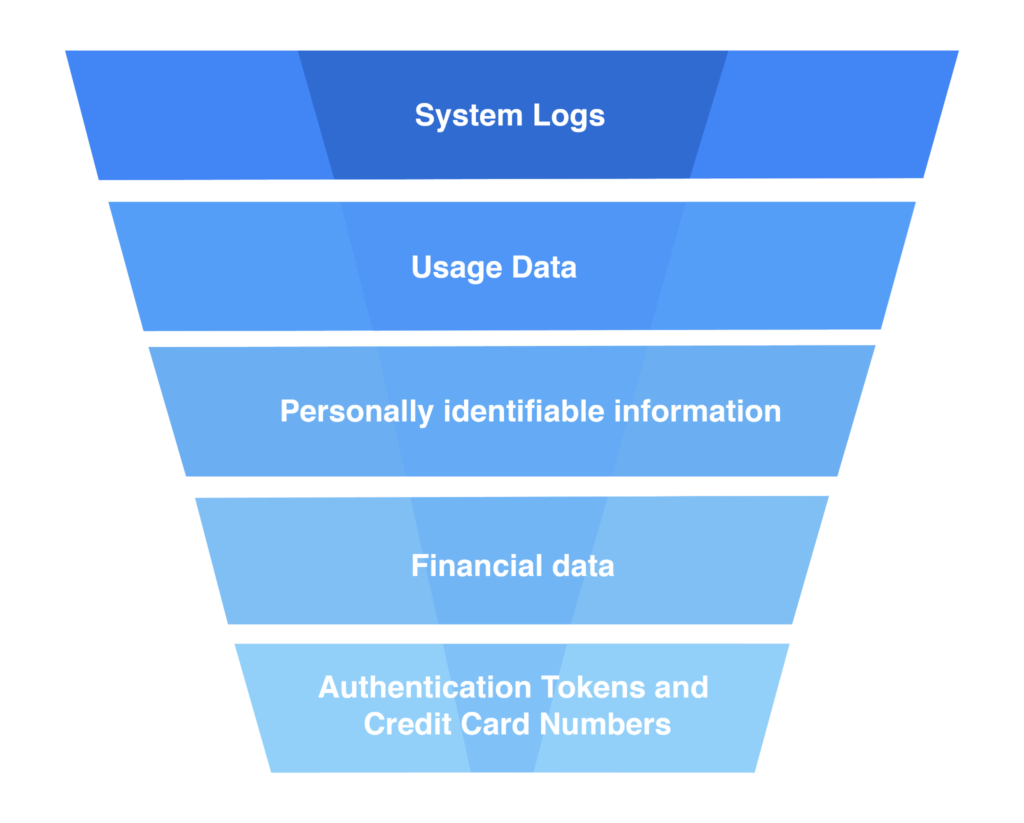

Common classes include:

- System-generated data such as logs, usage data and traces

- Personally identifiable information such as first names, last names, and identity numbers

- Financial data such as sales figures, expenditures, and revenue forecasts

- Operationally sensitive data such as authentication tokens and credit card details

Typically, the higher-volume data classes require less effort to secure and sanitize as long as care is taken to prevent data from more sensitive classifications leaking into less sensitive classifications. A common example of this happening is the logging of authentication tokens or credit card numbers to application logs.
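One way to reduce the risk of tokens or card numbers leaking into application logs is to redact them before a log record is written. The sketch below uses a `logging.Filter` for that; the regular expressions, the placeholder strings, and the class name `RedactingFilter` are assumptions for this example and will not catch every format, so treat it as a safety net rather than a guarantee.

```python
import logging
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
# "Bearer <token>" as commonly seen in Authorization headers.
TOKEN_PATTERN = re.compile(r"(?i)\b(bearer)\s+[A-Za-z0-9._~+/=-]+")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to avoid redacting arbitrary long numbers."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def redact(text: str) -> str:
    """Replace likely card numbers and bearer tokens with placeholders."""
    def mask_card(match):
        digits = re.sub(r"\D", "", match.group())
        return "[REDACTED CARD]" if luhn_valid(digits) else match.group()

    text = CARD_PATTERN.sub(mask_card, text)
    return TOKEN_PATTERN.sub(r"\1 [REDACTED TOKEN]", text)


class RedactingFilter(logging.Filter):
    """Logging filter that redacts the fully formatted message."""

    def filter(self, record):
        record.msg = redact(record.getMessage())
        record.args = ()  # The message is already formatted.
        return True
```

The filter can be attached to a handler with `handler.addFilter(RedactingFilter())` so that every record passing through that handler is scrubbed, regardless of which part of the application emitted it.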

An organization also needs to be very clear on the disaster recovery requirements for each class of data. Some classes will require little to no recovery processes, while other classes will require a formal disaster recovery plan, with regular verification of said plan through dry runs and checks.

The sensitivity of data typically increases as the volume of data decreases

Another typical example of sensitive data being moved to less strictly controlled data environments is when production or real data is moved to a testing or staging environment. This is usually done to aid in development and testing, and the data is often exposed to third parties such as testers or contractors. Without a proper sanitization process in place, this can easily expose sensitive data classes to unauthorized personnel.
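A sanitization step for copying production data to a test environment might look like the sketch below, which replaces personally identifiable fields with keyed pseudonyms. The field names in `PII_FIELDS`, the function names, and the `anon_` prefix are hypothetical. Note that pseudonymization of this kind is weaker than full anonymization: anyone holding the secret can recompute the mapping, so the secret itself must stay out of the test environment.

```python
import hashlib
import hmac

# Hypothetical column names treated as personally identifiable.
PII_FIELDS = {"first_name", "last_name", "identity_number", "email"}


def pseudonymize(value: str, secret: bytes) -> str:
    """Derive a stable, non-reversible stand-in for `value` using HMAC-SHA256."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"anon_{digest[:16]}"


def sanitize_row(row: dict, secret: bytes) -> dict:
    """Return a copy of `row` with PII fields replaced by pseudonyms."""
    return {
        key: pseudonymize(str(value), secret)
        if key in PII_FIELDS and value is not None
        else value
        for key, value in row.items()
    }
```

Because the same input always maps to the same pseudonym, relationships between tables survive the copy, which keeps the data useful for testing without exposing the original values to testers or contractors.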

Ensure regulatory compliance

Regulatory compliance can be tricky for a small organization due to the legal and technical expertise required to address it properly. This becomes even more complicated if a larger organization operates across countries or regions with differing regulatory requirements. An organization doing business in the USA might comply with the necessary state and federal requirements until it gets a government contract, at which point it will be necessary to spend even more time and resources on complying with the government-specific regulations.

Here are some common types of regulatory requirements with suggested methods to comply:

- Access to data: Users and customers have the right to access and manage their data, usually through a request to an appointed officer in a company. Creating a self-service portal for users to do this reduces the administrative burden on the company and its personnel.

- Protection of data: A user’s right to privacy is the primary driver for protection-of-data regulations. The best practices described in this article go a long way toward protecting user and company data.

- Data sovereignty: Some countries require that data generated within their jurisdictions stay there. In some cases, this can also include data about their citizens. The proliferation of multinational cloud providers makes complying with this requirement easier, but it can be more expensive.
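For the data sovereignty requirement in particular, a simple automated check can compare where each data store lives with where its data is allowed to live. The mapping of jurisdictions to cloud regions below is a made-up example, as are the function name and the resource fields; the actual rules for your organization need to come from your legal and compliance teams.

```python
# Hypothetical mapping of jurisdictions to the cloud regions approved for them.
ALLOWED_REGIONS = {
    "EU": {"eu-west-1", "eu-central-1"},
    "US": {"us-east-1", "us-west-2"},
}


def residency_violations(resources):
    """Return the IDs of resources stored outside their approved regions.

    Each resource is a dict with "id", "jurisdiction", and "region" keys.
    A jurisdiction with no approved regions is always reported.
    """
    violations = []
    for resource in resources:
        allowed = ALLOWED_REGIONS.get(resource["jurisdiction"], set())
        if resource["region"] not in allowed:
            violations.append(resource["id"])
    return violations
```

Running a check like this against an inventory export turns a legal requirement into something that can be verified on every audit cycle.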

Conclusion

These best practices, which cover networking, systems, infrastructure, processes and the human element, should be reviewed and applied consistently at regular intervals to ensure data security in an organization. They are by no means exhaustive, and further research on these topics is encouraged. It is also advisable to consider using third-party providers or software with a focus on enterprise data security to enhance your security efforts so that you can focus on your core business. Tools such as Paladin Cloud provide a unified, contextualized view of multi-cloud security risks, including correlating findings from application and data security platforms.

Data security is by no means a single task that can be completed once and then ticked off your to-do list. Review and audit your classification of data to determine whether sensitive data is being handled securely. Security continues to be a moving target due to the constant changes in the industry, attack vectors and security practices, making it difficult to ensure that you’re 100% covered without outside help. However, one thing that stays constant in cybersecurity is that the cost of preventing a breach is almost always lower than the cost of dealing with the consequences of one. Fortunately, there’s a large body of tools and best practices available to guide organizations in securing their data.